Overview

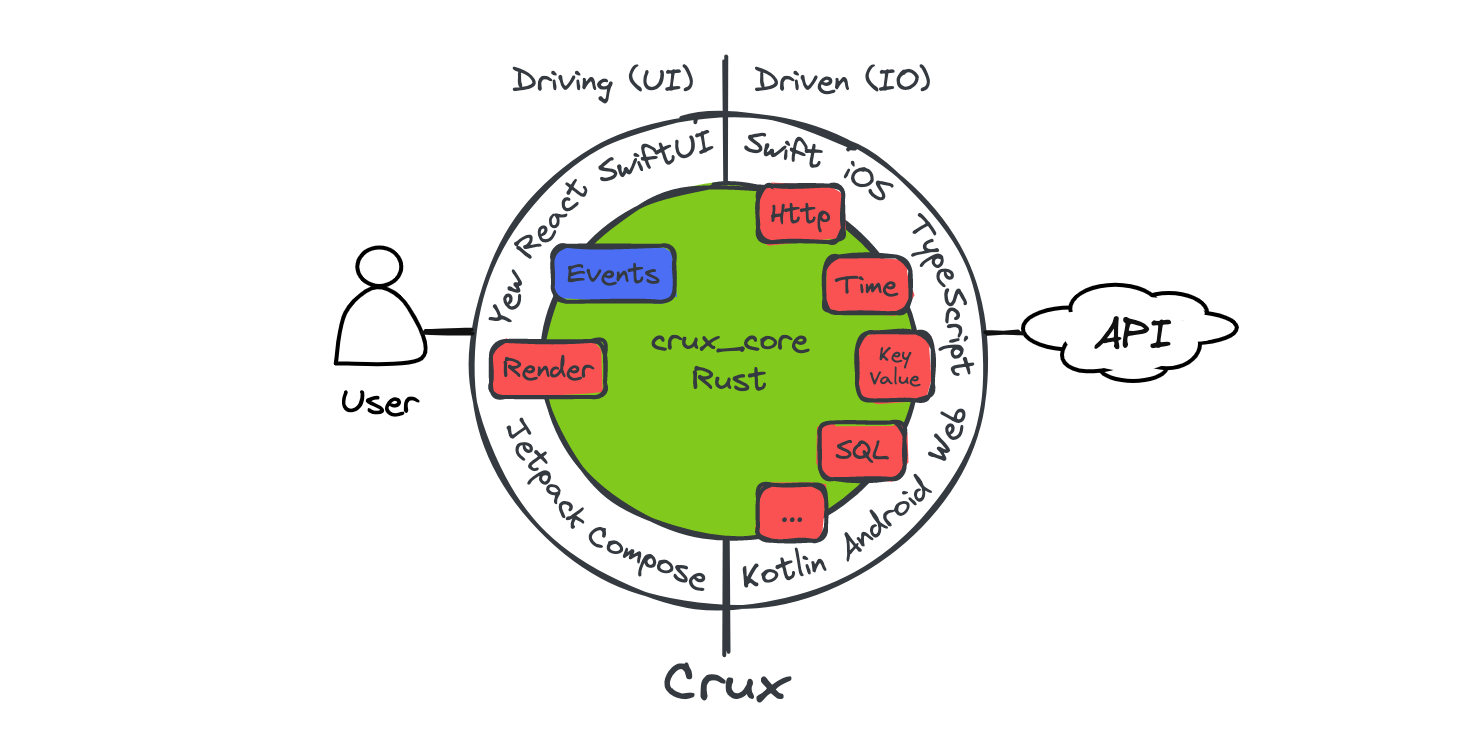

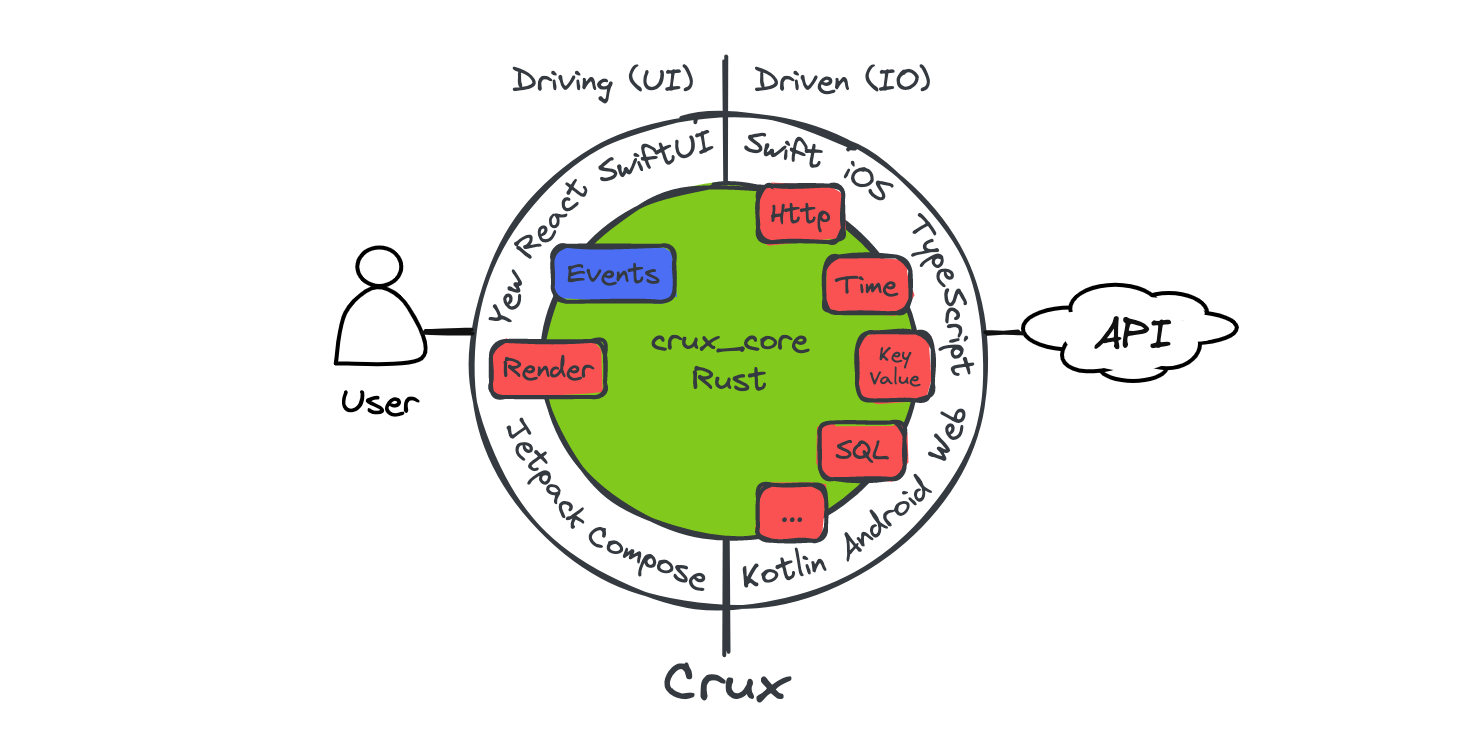

Crux is a framework for building cross-platform applications with better testability, higher code and behavior reuse, better safety, security, and more joy from better tools.

It splits the application into two distinct parts: a Core, built in Rust, which drives as much of the business logic as possible, and a Shell, built in the platform's native language (Swift, Kotlin, TypeScript), which provides all interfaces with the external world, including the human user, and acts as a platform on which the Core runs.

The interface between the two is a native FFI (Foreign Function Interface) with cross-language type checking and message passing semantics, where simple data structures are passed across the boundary.

To get playing with Crux quickly, follow the Getting Started steps. If you prefer to read more about how apps are built in Crux first, read the Development Guide. And if you'd like to know what possessed us to try this in the first place, read about our Motivation.

API documentation is available in two places: the latest published version on docs.rs, and the very latest master docs, if you too like to live dangerously.

- crux_core - the main Crux crate: latest release | latest master

- crux_http - HTTP client capability: latest release | latest master

- crux_kv - Key-value store capability: latest release | latest master

- crux_time - Time capability: latest release | latest master

You can see the latest version of this book (generated from the master branch) on GitHub Pages.

Crux is open source on GitHub. A good way to learn Crux is to explore the code, play with the examples, and raise issues or pull requests. We'd love you to get involved.

You can also join the friendly conversation on our Zulip channel.

Design overview

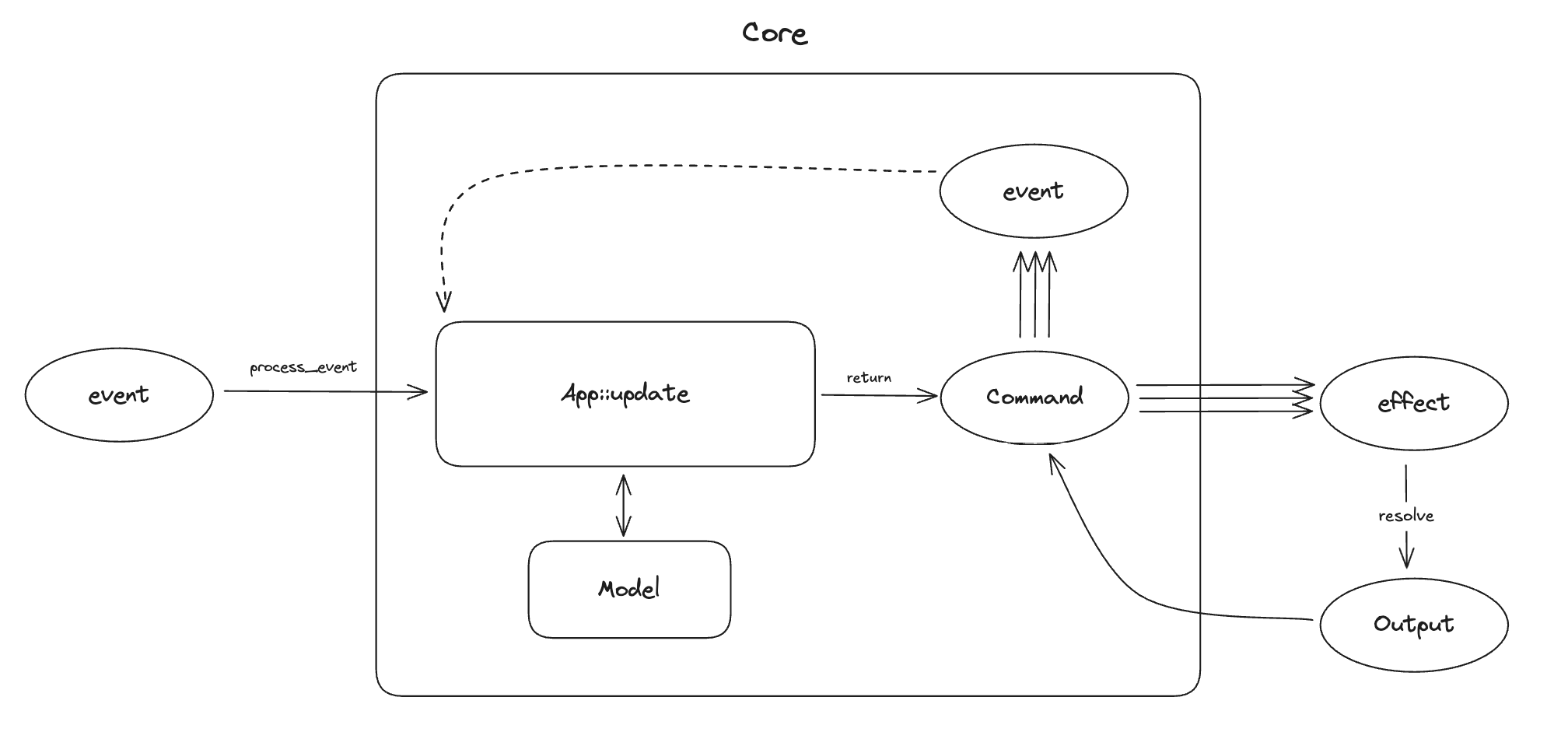

The architecture is event-driven, based on event sourcing. The Core holds the majority of state, which is updated in response to events happening in the Shell. The interface between the Core and the Shell is message-based.

The user interface layer is built natively, with modern declarative UI frameworks such as SwiftUI, Jetpack Compose and React/Vue, or a WASM-based framework on the web. The UI layer is as thin as it can be, and all other application logic is performed by the shared Core. The one restriction is that the Core is side-effect free. This is both a technical requirement (to be able to target WebAssembly) and an intentional design goal: separating logic from effects makes both easier to test in isolation.

The core requests side-effects from the Shell through common capabilities. The basic concept is that instead of doing the asynchronous work, the core describes the intent for the work with data, and passes this to the Shell to be performed. The Shell performs the work, and returns the outcomes back to the Core. This approach is inspired by Elm, and similar to how other purely functional languages deal with effects and I/O (e.g. the IO monad in Haskell). It is also similar to how iterators work in Rust.

The Core exports types for the messages it can understand. The Shell can call the Core and pass one of the messages. In return, it receives a set of side-effect requests to perform. When the work is completed, the Shell sends the result back into the Core, which responds with further requests if necessary.
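To make the round trip concrete, here is a deliberately simplified, std-only Rust model of it. Every name in it (`Event`, `Effect`, `Request`, `Core`, `run_shell` and the example URL) is hypothetical and is not the crux_core API. It only illustrates the shape of the protocol: the Core returns effect requests as data, tagged with an id, and the Shell performs them and hands each outcome back by that id.

```rust
// Simplified, std-only model of the Core/Shell message loop.
// All names are hypothetical; this is NOT the crux_core API.

/// Messages the Core understands.
#[derive(Debug, Clone, PartialEq)]
pub enum Event {
    FetchGreeting,
    // Internal event carrying the Shell's result back into the Core.
    GreetingFetched(String),
}

/// Work the Core wants done, described as data rather than performed.
#[derive(Debug, Clone, PartialEq)]
pub enum Effect {
    Http { url: String },
    Render,
}

/// An effect tagged with an id so the Shell can route its answer back.
#[derive(Debug, Clone, PartialEq)]
pub struct Request {
    pub id: u32,
    pub effect: Effect,
}

pub struct Core {
    greeting: Option<String>,
    next_id: u32,
    pending_http: Vec<u32>, // ids of HTTP requests still awaiting a response
}

impl Core {
    pub fn new() -> Self {
        Core { greeting: None, next_id: 0, pending_http: Vec::new() }
    }

    fn request(&mut self, effect: Effect) -> Request {
        let id = self.next_id;
        self.next_id += 1;
        Request { id, effect }
    }

    /// Updates state and returns effect requests; performs no I/O itself.
    pub fn process_event(&mut self, event: Event) -> Vec<Request> {
        match event {
            Event::FetchGreeting => {
                let url = "https://example.com/greeting".to_string();
                let req = self.request(Effect::Http { url });
                self.pending_http.push(req.id);
                vec![req]
            }
            Event::GreetingFetched(text) => {
                self.greeting = Some(text);
                vec![self.request(Effect::Render)]
            }
        }
    }

    /// Resumes the work behind request `id` with the Shell's result.
    pub fn handle_response(&mut self, id: u32, body: String) -> Vec<Request> {
        match self.pending_http.iter().position(|&p| p == id) {
            Some(index) => {
                self.pending_http.remove(index);
                self.process_event(Event::GreetingFetched(body))
            }
            None => Vec::new(), // unknown or already answered: nothing to do
        }
    }

    pub fn view(&self) -> String {
        self.greeting.clone().unwrap_or_else(|| "Loading...".to_string())
    }
}

/// A toy Shell: performs each requested effect and feeds results back until
/// the Core has no more work. Returns every view it "rendered".
pub fn run_shell(core: &mut Core, event: Event) -> Vec<String> {
    let mut rendered = Vec::new();
    let mut queue = core.process_event(event);
    while let Some(req) = queue.pop() {
        match req.effect {
            Effect::Http { url } => {
                // Stand-in for real networking done by the platform.
                let body = format!("Hello from {url}");
                queue.extend(core.handle_response(req.id, body));
            }
            Effect::Render => rendered.push(core.view()),
        }
    }
    rendered
}
```

Because all I/O stays in the Shell, a test can drive `process_event` and `handle_response` directly and assert on the returned requests. In a real Crux app the Shell loop is written in Swift, Kotlin or TypeScript, and the messages cross the boundary as serialized bytes rather than Rust values.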

Updating the user interface is considered one of the side-effects the Core can request. The entire interface is strongly typed and breaking changes in the core will result in build failures in the Shell.

Goals

We set out to find a better way of building apps across platforms. You can read more about our motivation. The overall goals of Crux are to:

- Build the majority of the application code once, in Rust

- Encapsulate the behavior of the app in the Core for reuse

- Follow the Ports and Adapters pattern, also known as Hexagonal Architecture, to facilitate pushing side-effects to the edge, making behavior easy to test

- Separate the behavior from the look and feel and interaction design

- Use the native UI toolkits to create the user experience that best fits a given platform

Path to 1.0

Crux is used in production apps today, and we consider it production ready. However, we still have a number of things to work on to call it 1.0, with a stable API, and other things one would expect from a mature framework.

Below is a list of some of the things we know we want to do before 1.0:

- Improved documentation, code examples, and example apps for newcomers

- Improved onboarding experience, with less boilerplate code that end users have to deal with

- Better FFI code generation to enable support for more languages (e.g. C#, Dart, even C++...) and in turn more Shells (e.g. .NET, Flutter), which will also enable desktop apps for Windows

- Revised capabilities and effects to allow for better, more natural app composition in larger apps, for composing capabilities, and generally for a more ergonomic effect API overall

Until then, we hope you will work with us on the rough edges, and adapt to the necessary API updates as we evolve. We strive to minimise the impact of changes as much as we can, but before 1.0, some breaking changes will be unavoidable.

Motivation

We set out to prove this approach to building apps largely because we've seen the drawbacks of all the other approaches in real life, and thought "there must be a better way". The two major available approaches to building the same application for iOS and Android are:

- Build a native app for each platform, effectively doing the work twice.

- Use React Native or Flutter to build the application once¹ and produce native looking and feeling apps which behave nearly identically.

The drawback of the first approach is doing the work twice. In order to build every feature for iOS and Android at the same time, you need twice the number of people: either people who happily do Swift and Kotlin (and they are very rare), or more likely a set of iOS engineers and another set of Android engineers. This typically leads to forming two separate, platform-focused teams. We have witnessed situations first-hand where those teams struggle with the same design problems, and despite one team encountering and solving the problem first, the other one can learn nothing from that experience (and that's despite long design discussions).

We think such experiences with the platform native approach are common, and they are the reason people look to React Native and Flutter. The issues with React Native are twofold:

- Only mostly native user interface

- In the case of React Native, the JavaScript ecosystem tooling disaster

React Native effectively takes over, and works hard to insulate the engineer from the native platform underneath and pretend it doesn't really exist. But of course, inevitably, it does, and the user interface ends up being built in a combination of 90% JavaScript/TypeScript and 10% Kotlin/Swift. This was still a major win when React Native was first introduced, because the platform native UI toolkits were imperative, following a version of the MVC architecture, and generally made it quite difficult to get UI state management right. React, on the other hand, is declarative, leaving much less space for errors stemming from the UI getting into an undefined state. This benefit was clearly recognised by iOS and Android, and both introduced their own declarative UI toolkits: SwiftUI and Jetpack Compose. Both of them are quite good, matching that particular advantage of React Native and leaving it with only one remaining advantage: building things once (in theory). But in exchange, React Native apps have to be written in JavaScript (and adjacent tools and languages).

The main issue with the JavaScript ecosystem is that it's built on sand. The underlying language is quite loose and has a lot of inconsistencies. It came with no package manager originally; now it has three. To serve code to the browser, it gets bundled, and the list of bundlers is too long to include here. Webpack, the most popular one, is famously difficult to configure. JavaScript was built as a dynamic language, so many basic human errors made while writing the code are only discovered when running it. Static type systems aim to solve that problem, and TypeScript adds one onto JavaScript, but the types only go so far (until they hit an any type, or dependencies with no type definitions), and they disappear at runtime.

In short, upgrading JavaScript to something modern takes a lot of tooling. Getting all this tooling set up and ready to build things is an all-day job, and so more tooling, like Next.js, has popped up to provide this configuration in a box, batteries included. Perhaps the final admission of this problem is the recent Biome toolchain (formerly the Rome project), which attempts to bring all the various tools under one roof (and Biome itself is built in Rust...).

It's no wonder that even a working setup of all the tooling has sharp edges, and cannot afford to be nearly as strict as tooling designed with strictness in mind, such as Rust's. The heart of the problem is that computers are strict and precise instruments, and humans are sloppy creatures. With enough humans (more than 10, being generous) and no additional help, the resulting code will be sloppy, full of unhandled edge cases, undefined behaviour being relied on, circular dependencies preventing testing in isolation, etc. (and yes, these are not hypotheticals).

Contrast that with Rust, which is as strict as it gets, and generally backs up the claim that if it compiles, it will work (and if you struggle to get it past the compiler, it's probably a bad idea). The tooling and package management are built in with Cargo. There are fewer decisions to make when setting up a Rust project.

In short, we think the JS ecosystem has jumped the shark, the "complexity toothpaste" is out of the tube, and it's time to stop. But there's no real viable alternative. Crux is our attempt to provide one.

¹ In reality it's more like 1.4x the effort to build the same app for two platforms.

Shared core and types

These are the steps to set up the two crates forming the shared core – the core itself, and the shared types crate which does type generation for the foreign languages.

We're hoping to automate some of these steps in future tooling. For now, the setup includes some copy & paste from one of the example projects.

Install the tools

This is an example of a rust-toolchain.toml file, which you can add at the root of your repo. It should ensure that the correct Rust channel and compile targets are installed automatically for you when you use any Rust tooling within the repo.

[toolchain]
channel = "stable"
components = ["rustfmt", "rustc-dev"]
targets = [
    "aarch64-apple-darwin",
    "aarch64-apple-ios",
    "aarch64-apple-ios-sim",
    "aarch64-linux-android",
    "wasm32-unknown-unknown",
    "x86_64-apple-ios",
]
profile = "minimal"

Create the core crate

The shared library

The first library to create is the one that will be shared across all platforms, containing the behavior of the app. You can call it whatever you like, but we have chosen the name shared here. You can create the shared Rust library like this:

cargo new --lib shared

The workspace and library manifests

We'll be adding a bunch of other folders into the monorepo, so we are choosing to use Cargo Workspaces. Edit the workspace /Cargo.toml file, at the monorepo root, to add the new library to our workspace. It should look something like this:

# /Cargo.toml
[workspace]
members = ["shared"]
resolver = "1"

[workspace.package]
authors = ["Red Badger Consulting Limited"]
edition = "2021"
repository = "https://github.com/redbadger/crux/"
license = "Apache-2.0"
keywords = ["crux", "crux_core", "cross-platform-ui", "ffi", "wasm"]
rust-version = "1.80"

[workspace.dependencies]
anyhow = "1.0.95"
crux_core = "0.12.0"
serde = "1.0.217"

The library's manifest, at /shared/Cargo.toml, should look something like the following, but there are a few things to note:

- the crate-type
  - lib is the default Rust library when linking into a Rust binary, e.g. in the web-yew or cli variant
  - staticlib is a static library (libshared.a) for including in the Swift iOS app variant
  - cdylib is a C-ABI dynamic library (libshared.so) for use with JNA when included in the Kotlin Android app variant

- we need to declare a feature called typegen that depends on the feature with the same name in the crux_core crate. This is used by this crate's sister library (often called shared_types) that will generate types for use across the FFI boundary (see the section below on generating shared types).

- the uniffi dependencies and uniffi-bindgen target should make sense after you read the next section

# /shared/Cargo.toml
[package]
name = "shared"
version = "0.1.0"
authors.workspace = true
repository.workspace = true
edition.workspace = true
license.workspace = true
keywords.workspace = true
rust-version.workspace = true

[lib]
crate-type = ["lib", "staticlib", "cdylib"]
name = "shared"

[features]
typegen = ["crux_core/typegen"]

[dependencies]
crux_core.workspace = true
serde = { workspace = true, features = ["derive"] }
uniffi = "0.29.2"
wasm-bindgen = "0.2.100"

[target.uniffi-bindgen.dependencies]
uniffi = { version = "0.29.2", features = ["cli"] }

[build-dependencies]
uniffi = { version = "0.29.2", features = ["build"] }

FFI bindings

Crux uses Mozilla's Uniffi to generate the FFI bindings for iOS and Android.

Generating the uniffi-bindgen CLI tool

Since version 0.23.0 of Uniffi, we need to also generate the binary that generates these bindings. This avoids the possibility of getting a version mismatch between a separately installed binary and the crate's Uniffi version. You can read more about it here.

Generating the binary is simple: we just add the following to our crate, in a file called /shared/src/bin/uniffi-bindgen.rs.

// /shared/src/bin/uniffi-bindgen.rs
fn main() {
    uniffi::uniffi_bindgen_main();
}

And then we can build it with cargo.

cargo run -p shared --bin uniffi-bindgen
# or
cargo build
./target/debug/uniffi-bindgen

The uniffi-bindgen executable will be used during the build in Xcode and in Android Studio (see the following pages).

The interface definitions

We will need an interface definition file for the FFI bindings. Uniffi has its own file format (similar to WebIDL) that has a .udl extension. You can create one at /shared/src/shared.udl, like this:

namespace shared {
    bytes process_event([ByRef] bytes msg);
    bytes handle_response(u32 id, [ByRef] bytes res);
    bytes view();
};
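These UDL types map onto plain Rust types: `bytes` is returned as `Vec<u8>`, `[ByRef] bytes` is received as `&[u8]`, and `u32` stays `u32`. The scaffolding in lib.rs uses exactly these signatures. Below is a std-only sketch of a Rust surface matching all three for a toy counter.

It rests on two assumptions:

- **Invented wire format.** The one-byte tags are made up purely for illustration. The real core serializes its types, and the Swift Shell in this guide uses bincode.
- **Stand-in core.** The static `Mutex` merely stands in for the single shared core instance.

None of this is generated Uniffi code.

```rust
// Hypothetical sketch of a byte-in/byte-out surface matching shared.udl.
// The one-byte encoding is invented for illustration only.
use std::sync::Mutex;

// One piece of core state per process (the real scaffolding keeps a single
// `Bridge<Counter>` in a static for the same reason).
static COUNT: Mutex<isize> = Mutex::new(0);

// Hypothetical wire tags.
pub const EVENT_INCREMENT: u8 = 0;
pub const EVENT_DECREMENT: u8 = 1;
pub const EVENT_RESET: u8 = 2;
pub const EFFECT_RENDER: u8 = 0;

/// bytes process_event([ByRef] bytes msg);
pub fn process_event(msg: &[u8]) -> Vec<u8> {
    let mut count = COUNT.lock().expect("core state poisoned");
    match msg.first() {
        Some(&EVENT_INCREMENT) => *count += 1,
        Some(&EVENT_DECREMENT) => *count -= 1,
        Some(&EVENT_RESET) => *count = 0,
        // Like the real scaffolding, give up loudly on undecodable input.
        other => panic!("cannot decode event: {other:?}"),
    }
    // The only effect this toy core requests: re-render the UI.
    vec![EFFECT_RENDER]
}

/// bytes handle_response(u32 id, [ByRef] bytes res);
/// The toy counter never waits on a response, so there is nothing to resume.
pub fn handle_response(_id: u32, _res: &[u8]) -> Vec<u8> {
    Vec::new()
}

/// bytes view();
pub fn view() -> Vec<u8> {
    let count = COUNT.lock().expect("core state poisoned");
    format!("Count is: {}", *count).into_bytes()
}
```

Keeping the boundary down to opaque bytes is what lets one small UDL file serve every Shell. The type safety comes from the generated types on each side agreeing on how those bytes are encoded.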

There are also a few additional parameters to tell Uniffi how to create bindings for Kotlin and Swift. They live in the file /shared/uniffi.toml, like this (feel free to adjust accordingly):

# /shared/uniffi.toml
[bindings.kotlin]
package_name = "com.example.simple_counter.shared"
cdylib_name = "shared"

[bindings.swift]
cdylib_name = "shared_ffi"
omit_argument_labels = true

Finally, we need a build.rs file in the root of the crate (/shared/build.rs), to generate the bindings:

// /shared/build.rs
fn main() {
    uniffi::generate_scaffolding("./src/shared.udl").unwrap();
}

Scaffolding

Soon we will have macros and/or code-gen to help with this, but for now, we need some scaffolding in /shared/src/lib.rs. You'll notice that we are re-exporting the Request type and the capabilities we want to use in our native Shells, as well as our public types from the shared library.

// /shared/src/lib.rs
pub mod app;

use std::sync::LazyLock;

pub use crux_core::{bridge::Bridge, Core, Request};

pub use app::*;

// TODO hide this plumbing

#[cfg(not(target_family = "wasm"))]
uniffi::include_scaffolding!("shared");

static CORE: LazyLock<Bridge<Counter>> = LazyLock::new(|| Bridge::new(Core::new()));

/// Ask the core to process an event
/// # Panics
/// If the core fails to process the event
#[cfg_attr(target_family = "wasm", wasm_bindgen::prelude::wasm_bindgen)]
#[must_use]
pub fn process_event(data: &[u8]) -> Vec<u8> {
    match CORE.process_event(data) {
        Ok(effects) => effects,
        Err(e) => panic!("{e}"),
    }
}

/// Ask the core to handle a response
/// # Panics
/// If the core fails to handle the response
#[cfg_attr(target_family = "wasm", wasm_bindgen::prelude::wasm_bindgen)]
#[must_use]
pub fn handle_response(id: u32, data: &[u8]) -> Vec<u8> {
    match CORE.handle_response(id, data) {
        Ok(effects) => effects,
        Err(e) => panic!("{e}"),
    }
}

/// Ask the core to render the view
/// # Panics
/// If the view cannot be serialized
#[cfg_attr(target_family = "wasm", wasm_bindgen::prelude::wasm_bindgen)]
#[must_use]
pub fn view() -> Vec<u8> {
    match CORE.view() {
        Ok(view) => view,
        Err(e) => panic!("{e}"),
    }
}

The app

Now we are in a position to create a basic app in /shared/src/app.rs. This is from the simple Counter example (which also has tests, although we're not showing them here).

// /shared/src/app.rs
use crux_core::{
    macros::effect,
    render::{render, RenderOperation},
    App, Command,
};
use serde::{Deserialize, Serialize};

#[derive(Serialize, Deserialize, Clone, Debug)]
pub enum Event {
    Increment,
    Decrement,
    Reset,
}

#[effect(typegen)]
pub enum Effect {
    Render(RenderOperation),
}

#[derive(Default)]
pub struct Model {
    count: isize,
}

#[derive(Serialize, Deserialize, Clone, Default)]
pub struct ViewModel {
    pub count: String,
}

#[derive(Default)]
pub struct Counter;

impl App for Counter {
    type Event = Event;
    type Model = Model;
    type ViewModel = ViewModel;
    type Capabilities = (); // will be deprecated, so use unit type for now
    type Effect = Effect;

    fn update(
        &self,
        event: Self::Event,
        model: &mut Self::Model,
        _caps: &(), // will be deprecated, so prefix with underscore for now
    ) -> Command<Effect, Event> {
        match event {
            Event::Increment => model.count += 1,
            Event::Decrement => model.count -= 1,
            Event::Reset => model.count = 0,
        }

        render()
    }

    fn view(&self, model: &Self::Model) -> Self::ViewModel {
        ViewModel {
            count: format!("Count is: {}", model.count),
        }
    }
}

The #[effect] macro can be used to annotate an enum to represent our effects. The enum has a variant for each effect, which carries the Operation type.

The real effect type generated by the macro is a little more complicated, with some plumbing to support the foreign function interface into Swift, Kotlin and other languages. You can read more about the effect system in the Managed Effects chapter of the guide.

The Capabilities associated type in the code above is an artifact of a migration of the effect API from previous versions of Crux. You can use the unit type () and everything will work fine. We will eventually remove this type and the last argument to the update function.

If you've got an existing app or you're simply curious about what this looked like before, you can read about it at the end of the Managed Effects chapter of the guide.
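Because update only mutates the model and returns effects as data, the counter's behavior can be checked with ordinary unit tests, with no Shell involved. The following is a std-only mirror of the counter logic above, not code that uses crux_core. The hypothetical `Effect::Render` stands in for the `render()` command, and `update` returns a `Vec<Effect>` instead of a `Command`.

```rust
// Std-only mirror of the counter's update/view logic (hypothetical types,
// not crux_core), showing why a side-effect free core is easy to test.

#[derive(Debug, Clone, PartialEq)]
pub enum Event {
    Increment,
    Decrement,
    Reset,
}

/// Stand-in for the render request the real app returns via `render()`.
#[derive(Debug, PartialEq)]
pub enum Effect {
    Render,
}

#[derive(Default, Debug)]
pub struct Model {
    count: isize,
}

#[derive(Debug, PartialEq)]
pub struct ViewModel {
    pub count: String,
}

/// Mutates the model and describes the follow-up work as data.
pub fn update(event: Event, model: &mut Model) -> Vec<Effect> {
    match event {
        Event::Increment => model.count += 1,
        Event::Decrement => model.count -= 1,
        Event::Reset => model.count = 0,
    }
    vec![Effect::Render]
}

/// Derives what the UI should show from the model.
pub fn view(model: &Model) -> ViewModel {
    ViewModel {
        count: format!("Count is: {}", model.count),
    }
}
```

A test can then feed a sequence of events and assert on both the requested effects and the resulting view model, without a simulator or device.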

Make sure everything builds OK

cargo build

Create the shared types crate

This crate serves as the container for type generation for the foreign languages.

Work is being done to remove the need for this crate, but for now, it is needed in order to drive the generation of the types that cross the FFI boundary.

- Copy over the shared_types folder from the simple_counter example.

- Add the shared types crate to workspace.members in the /Cargo.toml file at the monorepo root.

- Edit the build.rs file and make sure that your app type is registered. In our example, the app type is Counter, so make sure you include this statement in your build.rs:

  gen.register_app::<Counter>()?;

The build.rs file should now look like this:

use crux_core::typegen::TypeGen;
use shared::Counter;
use std::path::PathBuf;

fn main() -> anyhow::Result<()> {
    println!("cargo:rerun-if-changed=../shared");

    let mut gen = TypeGen::new();

    gen.register_app::<Counter>()?;

    let output_root = PathBuf::from("./generated");

    gen.swift("SharedTypes", output_root.join("swift"))?;
    gen.java("com.crux.example.simple_counter", output_root.join("java"))?;
    gen.typescript("shared_types", output_root.join("typescript"))?;

    Ok(())
}

If you are using the latest versions of the crux_http (>= v0.10.0), crux_kv (>= v0.5.0) or crux_time (>= v0.5.0) capabilities, you will need to add a build dependency to the capability crate, with the typegen feature enabled, so your Cargo.toml file may end up looking something like this (from the cat_facts example):

[package]
name = "shared_types"
version = "0.1.0"
authors.workspace = true
repository.workspace = true
edition.workspace = true
license.workspace = true
keywords.workspace = true
rust-version.workspace = true

[dependencies]

[build-dependencies]
anyhow.workspace = true
crux_core = { workspace = true, features = ["typegen"] }
crux_http = { workspace = true, features = ["typegen"] }
crux_kv = { workspace = true, features = ["typegen"] }
crux_time = { workspace = true, features = ["typegen"] }
shared = { path = "../shared", features = ["typegen"] }

Due to a current limitation with the reflection library, you may need to manually register nested enum types in your build.rs file (see https://github.com/zefchain/serde-reflection/tree/main/serde-reflection#supported-features).

Note that you don't have to do this for the latest versions of the crux_http (>= v0.10.0), crux_kv (>= v0.5.0) or crux_time (>= v0.5.0) capabilities, which now do this registration for you, although you will need to add a build dependency to the capability crate, with the typegen feature enabled.

If you do end up needing to register a type manually (you should get a helpful error to tell you this), you can use the register_type method (e.g. gen.register_type::<TextCursor>()?;) as shown in this build.rs file from the shared_types crate of the notes example:

use crux_core::typegen::TypeGen;
use shared::{NoteEditor, TextCursor};
use std::path::PathBuf;

fn main() -> anyhow::Result<()> {
    println!("cargo:rerun-if-changed=../shared");

    let mut gen = TypeGen::new();

    gen.register_app::<NoteEditor>()?;

    // Note: currently required as we can't find enums inside enums, see:
    // https://github.com/zefchain/serde-reflection/tree/main/serde-reflection#supported-features
    gen.register_type::<TextCursor>()?;

    let output_root = PathBuf::from("./generated");

    gen.swift("SharedTypes", output_root.join("swift"))?;

    // TODO these are for later
    //
    // gen.java("com.example.counter.shared_types", output_root.join("java"))?;

    gen.typescript("shared_types", output_root.join("typescript"))?;

    Ok(())
}

Building your app

Make sure everything builds and foreign types get generated into the generated folder.

(If you're generating TypeScript, you may need pnpm to be installed and in your $PATH.)

cargo build

If you have a Capabilities struct (i.e. you are not using the new #[effect] macro), and are having problems building, make sure your Capabilities struct also implements the Export trait.

There is a derive macro that can do this for you, e.g.:

#[cfg_attr(feature = "typegen", derive(crux_core::macros::Export))]
#[derive(crux_core::macros::Effect)]
pub struct Capabilities {
    render: Render<Event>,
    http: Http<Event>,
}

The Export and Effect derive macros can be configured with the effect attribute if you need to specify a different name for the Effect type, e.g.:

#[cfg_attr(feature = "typegen", derive(Export))]
#[derive(Effect)]
#[effect(name = "MyEffect")]
pub struct Capabilities {
    render: Render<Event>,
    pub_sub: PubSub<Event>,
}

Additionally, if you are using a Capability that does not need to be exported to the foreign language, you can use the #[effect(skip)] attribute to skip exporting it, e.g.:

#[cfg_attr(feature = "typegen", derive(Export))]
#[derive(Effect)]
pub struct Capabilities {
    render: Render<Event>,
    #[effect(skip)]
    compose: Compose<Event>,
}

iOS

When we use Crux to build iOS apps, the Core API bindings are generated in Swift (with C headers) using Mozilla's Uniffi.

The shared core (that contains our app's behavior) is compiled to a static library and linked into the iOS binary. To do this we use cargo-xcode to generate an Xcode project for the shared core, which we can include as a sub-project in our iOS app.

The shared types are generated by Crux as a Swift package, which we can add to our iOS project as a dependency. The Swift code to serialize and deserialize these types across the boundary is also generated by Crux as Swift packages.

This section has two guides for building iOS apps with Crux:

- iOS — Swift and SwiftUI — using XcodeGen

- iOS — Swift and SwiftUI — manual setup

We recommend the first option, as it's definitely the simplest way to get started.

iOS — Swift and SwiftUI — using XcodeGen

These are the steps to set up Xcode to build and run a simple iOS app that calls into a shared core.

We think that using XcodeGen may be the simplest way to create an Xcode project to build and run a simple iOS app that calls into a shared core. If you'd rather set up Xcode manually, you can jump to iOS — Swift and SwiftUI — manual setup, otherwise read on.

This walk-through assumes you have already added the shared and shared_types libraries to your repo — as described in Shared core and types.

Compile our Rust shared library

When we build our iOS app, we also want to build the Rust core as a static library so that it can be linked into the binary that we're going to ship.

We will use cargo-xcode to generate an Xcode project for our shared library, which we can add as a sub-project in Xcode.

Recent changes to cargo-xcode mean that we need to use version <=1.7.0 for now.

If you don't have this already, you can install it in one of two ways:

- Globally, with:

  cargo install --force cargo-xcode --version 1.7.0

- Locally, using cargo-run-bin, after ensuring that your Cargo.toml has the following lines (see The workspace and library manifests):

  [workspace.metadata.bin]
  cargo-xcode = { version = "=1.7.0" }

  Ensure you have cargo-run-bin (and optionally cargo-binstall) installed:

  cargo install cargo-run-bin cargo-binstall

  Then, in the root of your app:

  cargo bin --install # will be faster if `cargo-binstall` is installed
  cargo bin --sync-aliases # to use `cargo xcode` instead of `cargo bin xcode`

Let's generate the sub-project:

cargo xcode

This generates an Xcode project for each crate in the workspace, but we're only interested in the one it creates in the shared directory. Don't open this generated project yet; it'll be included when we generate the Xcode project for our iOS app.

Generate the Xcode project for our iOS app

We will use XcodeGen to generate an Xcode project for our iOS app.

If you don't have this already, you can install it with brew install xcodegen.

Before we generate the Xcode project, we need to create some directories and a project.yml file:

mkdir -p iOS/SimpleCounter
cd iOS
touch project.yml

The project.yml file describes the Xcode project we want to generate. Here's one for the SimpleCounter example; you may want to adapt this for your own project:

name: SimpleCounter
projectReferences:
  Shared:
    path: ../shared/shared.xcodeproj
packages:
  SharedTypes:
    path: ../shared_types/generated/swift/SharedTypes
options:
  bundleIdPrefix: com.example.simple_counter
attributes:
  BuildIndependentTargetsInParallel: true
targets:
  SimpleCounter:
    type: application
    platform: iOS
    deploymentTarget: "15.0"
    sources:
      - SimpleCounter
      - path: ../shared/src/shared.udl
        buildPhase: sources
    dependencies:
      - target: Shared/uniffi-bindgen-bin
      - target: Shared/shared-staticlib
      - package: SharedTypes
    info:
      path: SimpleCounter/Info.plist
      properties:
        UISupportedInterfaceOrientations:
          - UIInterfaceOrientationPortrait
          - UIInterfaceOrientationLandscapeLeft
          - UIInterfaceOrientationLandscapeRight
        UILaunchScreen: {}
    settings:
      OTHER_LDFLAGS: [-w]
      SWIFT_OBJC_BRIDGING_HEADER: generated/sharedFFI.h
      ENABLE_USER_SCRIPT_SANDBOXING: NO
    buildRules:
      - name: Generate FFI
        filePattern: "*.udl"
        script: |
          #!/bin/bash
          set -e

          # Skip during indexing phase in XCode 13+
          if [ "$ACTION" == "indexbuild" ]; then
            echo "Not building *.udl files during indexing."
            exit 0
          fi

          # Skip for preview builds
          if [ "$ENABLE_PREVIEWS" = "YES" ]; then
            echo "Not building *.udl files during preview builds."
            exit 0
          fi

          cd "${INPUT_FILE_DIR}/.."
          "${BUILD_DIR}/${CONFIGURATION}/uniffi-bindgen" generate "src/${INPUT_FILE_NAME}" --language swift --out-dir "${PROJECT_DIR}/generated"
        outputFiles:
          - $(PROJECT_DIR)/generated/$(INPUT_FILE_BASE).swift
          - $(PROJECT_DIR)/generated/$(INPUT_FILE_BASE)FFI.h
        runOncePerArchitecture: false

Then we can generate the Xcode project:

xcodegen

This should create an iOS/SimpleCounter.xcodeproj project file (XcodeGen writes the project next to project.yml by default), which we can open in Xcode. It should build OK, but we will need to add some code!

Create some UI and run in the Simulator, or on an iPhone

There is a slightly more advanced example of an iOS app in the Crux repository. However, we will use the simple counter example, which has shared and shared_types libraries that will work with the following example code.

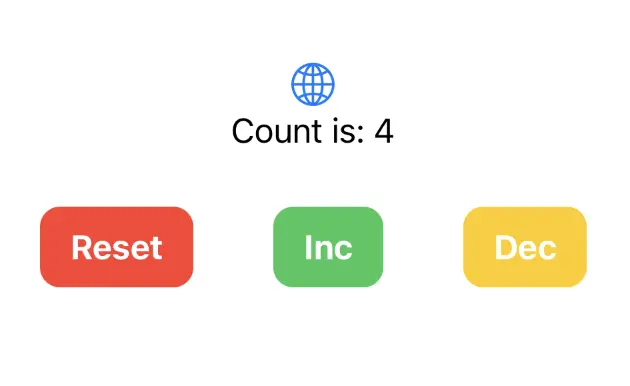

Simple counter example

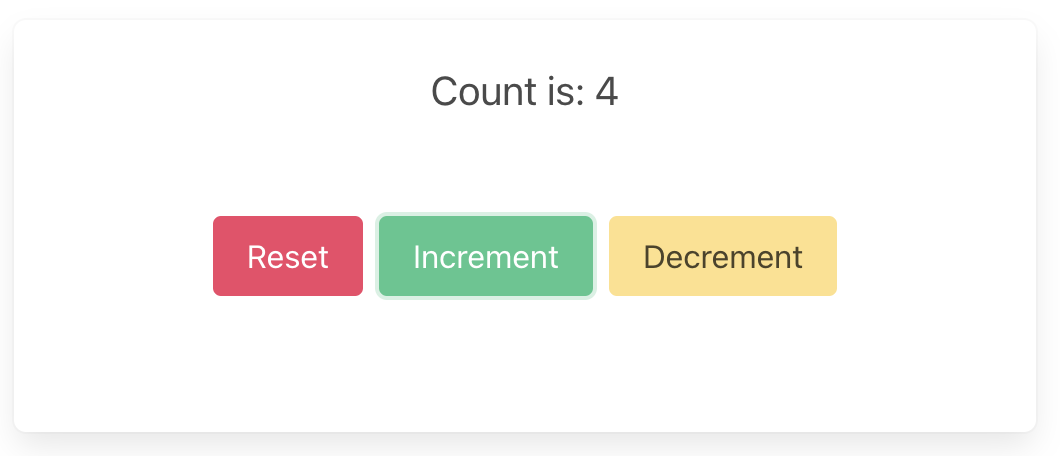

A simple app that increments, decrements and resets a counter.

Wrap the core to support capabilities

First, let's add some boilerplate code to wrap our core and handle the capabilities that we are using. For this example, we only need to support the Render capability, which triggers a render of the UI.

This code that wraps the core only needs to be written once — it only grows when we need to support additional capabilities.

Edit iOS/SimpleCounter/core.swift to look like the following. This code sends our (UI-generated) events to the core, and handles any effects that the core asks for. In this simple example, we aren't calling any HTTP APIs or handling any side effects other than rendering the UI, so we just handle this render effect by updating the published view model from the core.

import Foundation
import SharedTypes

@MainActor
class Core: ObservableObject {
    @Published var view: ViewModel

    init() {
        self.view = try! .bincodeDeserialize(input: [UInt8](SimpleCounter.view()))
    }

    func update(_ event: Event) {
        let effects = [UInt8](processEvent(Data(try! event.bincodeSerialize())))

        let requests: [Request] = try! .bincodeDeserialize(input: effects)
        for request in requests {
            processEffect(request)
        }
    }

    func processEffect(_ request: Request) {
        switch request.effect {
        case .render:
            view = try! .bincodeDeserialize(input: [UInt8](SimpleCounter.view()))
        }
    }
}

That switch statement, above, is where you would handle any other effects that your core might ask for. For example, if your core needs to make an HTTP request, you would handle that here. To see an example of this, take a look at the counter example in the Crux repository.

Edit iOS/SimpleCounter/ContentView.swift to look like the following:

import SharedTypes
import SwiftUI

struct ContentView: View {
    @ObservedObject var core: Core

    var body: some View {
        VStack {
            Image(systemName: "globe")
                .imageScale(.large)
                .foregroundColor(.accentColor)
            Text(core.view.count)
            HStack {
                ActionButton(label: "Reset", color: .red) {
                    core.update(.reset)
                }
                ActionButton(label: "Inc", color: .green) {
                    core.update(.increment)
                }
                ActionButton(label: "Dec", color: .yellow) {
                    core.update(.decrement)
                }
            }
        }
    }
}

struct ActionButton: View {
    var label: String
    var color: Color
    var action: () -> Void

    init(label: String, color: Color, action: @escaping () -> Void) {
        self.label = label
        self.color = color
        self.action = action
    }

    var body: some View {
        Button(action: action) {
            Text(label)
                .fontWeight(.bold)
                .font(.body)
                .padding(EdgeInsets(top: 10, leading: 15, bottom: 10, trailing: 15))
                .background(color)
                .cornerRadius(10)
                .foregroundColor(.white)
                .padding()
        }
    }
}

struct ContentView_Previews: PreviewProvider {
    static var previews: some View {
        ContentView(core: Core())
    }
}

And create iOS/SimpleCounter/SimpleCounterApp.swift to look like this:

```swift
import SwiftUI

@main
struct SimpleCounterApp: App {
    var body: some Scene {
        WindowGroup {
            ContentView(core: Core())
        }
    }
}
```

Run xcodegen again to update the Xcode project with these newly created source files (or add them manually in Xcode to the SimpleCounter group), and then open iOS/SimpleCounter/SimpleCounter.xcodeproj in Xcode. You might need to select the SimpleCounter scheme, and an appropriate simulator, in the drop-down at the top, before you build.

You should then be able to run the app in the simulator or on an iPhone, and it should look like this:

iOS — Swift and SwiftUI — manual setup

These are the steps to set up Xcode to build and run a simple iOS app that calls into a shared core.

We recommend setting up Xcode with XcodeGen as described in the previous section. It is the simplest way to create an Xcode project to build and run a simple iOS app that calls into a shared core. However, if you want to set up Xcode manually then read on.

This walk-through assumes you have already added the shared and shared_types libraries to your repo, as described in Shared core and types, and that you have built them using cargo build.

Create an iOS App

The first thing we need to do is create a new iOS app in Xcode.

Let's call the app "SimpleCounter" and select "SwiftUI" for the interface and "Swift" for the language. If you choose to create the app in the root folder of your monorepo, then you might want to rename the folder it creates to "iOS". Your repo's directory structure might now look something like this (some files elided):

```
.
├── Cargo.lock
├── Cargo.toml
├── iOS
│   ├── SimpleCounter
│   │   ├── ContentView.swift
│   │   └── SimpleCounterApp.swift
│   └── SimpleCounter.xcodeproj
│       └── project.pbxproj
├── shared
│   ├── build.rs
│   ├── Cargo.toml
│   ├── src
│   │   ├── counter.rs
│   │   ├── lib.rs
│   │   └── shared.udl
│   └── uniffi.toml
├── shared_types
│   ├── build.rs
│   ├── Cargo.toml
│   └── src
│       └── lib.rs
└── target
```

Generate FFI bindings

We want UniFFI to create the Swift bindings and the C headers for our shared library, and store them in a directory called generated.

To achieve this, we'll associate a script with files that match the pattern *.udl (this will catch the interface definition file we created earlier), and then add our shared.udl file to the project.

Note that our shared library generates the uniffi-bindgen binary (as explained on the page "Shared core and types") that the script relies on, so make sure you have built it already, using cargo build.

In "Build Rules", add a rule to process files that match the pattern *.udl with the following script (and also uncheck "Run once per architecture").

```bash
#!/bin/bash
set -e

# Skip during indexing phase in Xcode 13+
if [ "$ACTION" == "indexbuild" ]; then
  echo "Not building *.udl files during indexing."
  exit 0
fi

# Skip for preview builds
if [ "$ENABLE_PREVIEWS" = "YES" ]; then
  echo "Not building *.udl files during preview builds."
  exit 0
fi

cd "${INPUT_FILE_DIR}/.."
"${BUILD_DIR}/${CONFIGURATION}/uniffi-bindgen" generate "src/${INPUT_FILE_NAME}" --language swift --out-dir "${PROJECT_DIR}/generated"
```

We'll need to add the following as output files:

```
$(PROJECT_DIR)/generated/$(INPUT_FILE_BASE).swift
$(PROJECT_DIR)/generated/$(INPUT_FILE_BASE)FFI.h
```
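The build rule's decisions can be summarised as a small function. This is a hypothetical Rust sketch that mirrors the script above. It takes the Xcode build settings the script and the output-file list use (ACTION, ENABLE_PREVIEWS, PROJECT_DIR and INPUT_FILE_BASE) as a plain map. It returns None where the script exits early, and otherwise the two output files Xcode is told to expect. It does not run uniffi-bindgen.

```rust
use std::collections::HashMap;

// Hypothetical model of the *.udl build rule: `None` means the script exits
// early; otherwise these are the files Xcode should expect it to produce.
fn expected_outputs(env: &HashMap<&str, &str>) -> Option<[String; 2]> {
    // Skip during the indexing phase (Xcode 13+).
    if env.get("ACTION") == Some(&"indexbuild") {
        return None;
    }
    // Skip for SwiftUI preview builds.
    if env.get("ENABLE_PREVIEWS") == Some(&"YES") {
        return None;
    }
    // In this sketch, a missing setting also yields `None`.
    let project_dir = env.get("PROJECT_DIR")?;
    let base = env.get("INPUT_FILE_BASE")?;
    Some([
        format!("{project_dir}/generated/{base}.swift"),
        format!("{project_dir}/generated/{base}FFI.h"),
    ])
}

fn main() {
    let env = HashMap::from([
        ("ACTION", "build"),
        ("PROJECT_DIR", "/path/to/repo/iOS"), // hypothetical path
        ("INPUT_FILE_BASE", "shared"),
    ]);
    // Prints the .swift and FFI.h paths under /path/to/repo/iOS/generated
    println!("{:?}", expected_outputs(&env));
}
```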

Now go to the project settings, "Build Phases, Compile Sources", and add /shared/src/shared.udl using the "add other" button, selecting "Create folder references".

You may also need to go to "Build Settings, User Script Sandboxing" and set this to No to give the script permission to create files.

Build the project (cmd-B), which will fail, but the above script should run successfully and the "generated" folder should contain the generated Swift types and C header files:

```
$ ls iOS/generated
shared.swift  sharedFFI.h  sharedFFI.modulemap
```

Add the bridging header

In "Build Settings", search for "bridging header", and add generated/sharedFFI.h for any architecture/SDK, i.e. in both Debug and Release. If there isn't already a setting for "bridging header", you can add one (and then delete it) as per this StackOverflow question.

Compile our Rust shared library

When we build our iOS app, we also want to build the Rust core as a static library so that it can be linked into the binary that we're going to ship.

We will use cargo-xcode to generate an Xcode project for our shared library, which we can add as a sub-project in Xcode.

Recent changes to cargo-xcode mean that we need to use version <=1.7.0 for now.

If you don't have this already, you can install it in one of two ways:

- Globally, with:

  ```bash
  cargo install --force cargo-xcode --version 1.7.0
  ```

- Locally, using cargo-run-bin, after ensuring that your Cargo.toml has the following lines (see The workspace and library manifests):

  ```toml
  [workspace.metadata.bin]
  cargo-xcode = { version = "=1.7.0" }
  ```

  Ensure you have cargo-run-bin (and optionally cargo-binstall) installed:

  ```bash
  cargo install cargo-run-bin cargo-binstall
  ```

  Then, in the root of your app:

  ```bash
  cargo bin --install # will be faster if `cargo-binstall` is installed
  cargo bin --sync-aliases # to use `cargo xcode` instead of `cargo bin xcode`
  ```

Let's generate the sub-project:

```bash
cargo xcode
```

This generates an Xcode project for each crate in the workspace, but we're only interested in the one it creates in the shared directory. Don't open this generated project yet.

Using Finder, drag the shared/shared.xcodeproj folder under the Xcode project root.

Then, in the "Build Phases, Link Binary with Libraries" section, add the libshared_static.a library (you should be able to navigate to it as Workspace -> shared -> libshared_static.a).

Add the Shared Types

Using Finder, drag the shared_types/generated/swift/SharedTypes folder under the Xcode project root.

Then, in the "Build Phases, Link Binary with Libraries" section, add the SharedTypes library (you should be able to navigate to it as Workspace -> SharedTypes -> SharedTypes).

Create some UI and run in the Simulator, or on an iPhone

There is a slightly more advanced example of an iOS app in the Crux repository.

However, we will use the simple counter example, which has shared and shared_types libraries that will work with the following example code.

Simple counter example

A simple app that increments, decrements and resets a counter.

Wrap the core to support capabilities

First, let's add some boilerplate code to wrap our core and handle the capabilities that we are using. For this example, we only need to support the Render capability, which triggers a render of the UI.

This code that wraps the core only needs to be written once — it only grows when we need to support additional capabilities.

Edit iOS/SimpleCounter/core.swift to look like the following. This code sends our (UI-generated) events to the core, and handles any effects that the core asks for. In this simple example, we aren't calling any HTTP APIs or handling any side effects other than rendering the UI, so we just handle this render effect by updating the published view model from the core.

```swift
import Foundation
import SharedTypes

@MainActor
class Core: ObservableObject {
    @Published var view: ViewModel

    init() {
        self.view = try! .bincodeDeserialize(input: [UInt8](SimpleCounter.view()))
    }

    func update(_ event: Event) {
        let effects = [UInt8](processEvent(Data(try! event.bincodeSerialize())))

        let requests: [Request] = try! .bincodeDeserialize(input: effects)
        for request in requests {
            processEffect(request)
        }
    }

    func processEffect(_ request: Request) {
        switch request.effect {
        case .render:
            view = try! .bincodeDeserialize(input: [UInt8](SimpleCounter.view()))
        }
    }
}
```

That switch statement, above, is where you would handle any other effects that your core might ask for. For example, if your core needs to make an HTTP request, you would handle that here. To see an example of this, take a look at the counter example in the Crux repository.

Edit iOS/SimpleCounter/ContentView.swift to look like the following:

```swift
import SharedTypes
import SwiftUI

struct ContentView: View {
    @ObservedObject var core: Core

    var body: some View {
        VStack {
            Image(systemName: "globe")
                .imageScale(.large)
                .foregroundColor(.accentColor)
            Text(core.view.count)
            HStack {
                ActionButton(label: "Reset", color: .red) {
                    core.update(.reset)
                }
                ActionButton(label: "Inc", color: .green) {
                    core.update(.increment)
                }
                ActionButton(label: "Dec", color: .yellow) {
                    core.update(.decrement)
                }
            }
        }
    }
}

struct ActionButton: View {
    var label: String
    var color: Color
    var action: () -> Void

    init(label: String, color: Color, action: @escaping () -> Void) {
        self.label = label
        self.color = color
        self.action = action
    }

    var body: some View {
        Button(action: action) {
            Text(label)
                .fontWeight(.bold)
                .font(.body)
                .padding(EdgeInsets(top: 10, leading: 15, bottom: 10, trailing: 15))
                .background(color)
                .cornerRadius(10)
                .foregroundColor(.white)
                .padding()
        }
    }
}

struct ContentView_Previews: PreviewProvider {
    static var previews: some View {
        ContentView(core: Core())
    }
}
```

And create iOS/SimpleCounter/SimpleCounterApp.swift to look like this:

```swift
import SwiftUI

@main
struct SimpleCounterApp: App {
    var body: some Scene {
        WindowGroup {
            ContentView(core: Core())
        }
    }
}
```

You should then be able to run the app in the simulator or on an iPhone, and it should look like this:

Android

When we use Crux to build Android apps, the Core API bindings are generated in Kotlin using Mozilla's UniFFI.

The shared core (that contains our app's behavior) is compiled to a dynamic library, using Mozilla's Rust gradle plugin for Android and the Android NDK. The library is loaded at runtime using Java Native Access.

The shared types are generated by Crux as Java packages, which we can add to our Android project using sourceSets. The Java code to serialize and deserialize these types across the boundary is also generated by Crux as Java packages.

This section has a guide for building Android apps with Crux:

Android — Kotlin and Jetpack Compose

These are the steps to set up Android Studio to build and run a simple Android app that calls into a shared core.

This walk-through assumes you have already added the shared and shared_types libraries to your repo, as described in Shared core and types.

We want to make setting up Android Studio to work with Crux really easy. As time progresses we will try to simplify and automate as much as possible, but at the moment there is some manual configuration to do. This only needs doing once, so we hope it's not too much trouble. If you know of any better ways than those we describe below, please raise an issue (or a PR) at https://github.com/redbadger/crux.

This walkthrough uses Mozilla's excellent Rust gradle plugin for Android, which uses Python. However, the pipes module has been removed from the Python standard library (as of Python 3.13), so you may encounter an error linking your shared library.

If you hit this problem, you can either:

- use an older Python (<3.13)
- wait for a fix (see this issue)
- or use a different plugin; there is a PR in the Crux repo that explores the use of cargo-ndk and the cargo-ndk-android plugin that may be useful.

Create an Android App

The first thing we need to do is create a new Android app in Android Studio.

Open Android Studio and create a new project, for "Phone and Tablet", of type "Empty Activity". In this walk-through, we'll call it "SimpleCounter":

- "Name": SimpleCounter
- "Package name": com.example.simple_counter
- "Save Location": a directory called Android at the root of our monorepo
- "Minimum SDK": API 34
- "Build configuration language": Groovy DSL (build.gradle)

Your repo's directory structure might now look something like this (some files elided):

```
.
├── Android
│   ├── app
│   │   ├── build.gradle
│   │   ├── libs
│   │   └── src
│   │       └── main
│   │           ├── AndroidManifest.xml
│   │           └── java
│   │               └── com
│   │                   └── example
│   │                       └── simple_counter
│   │                           └── MainActivity.kt
│   ├── build.gradle
│   ├── gradle.properties
│   ├── local.properties
│   └── settings.gradle
├── Cargo.lock
├── Cargo.toml
├── shared
│   ├── build.rs
│   ├── Cargo.toml
│   ├── src
│   │   ├── app.rs
│   │   ├── lib.rs
│   │   └── shared.udl
│   └── uniffi.toml
├── shared_types
│   ├── build.rs
│   ├── Cargo.toml
│   └── src
│       └── lib.rs
└── target
```

Add a Kotlin Android Library

This shared Android library (aar) is going to wrap our shared Rust library.

Under File -> New -> New Module, choose "Android Library" and give it the "Module name" shared. Set the "Package name" to match the one from your /shared/uniffi.toml, which in this example is com.example.simple_counter.shared.

Again, set the "Build configuration language" to Groovy DSL (build.gradle).

For more information on how to add an Android library see https://developer.android.com/studio/projects/android-library.
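The package name matters because generated Kotlin and Java sources are laid out one directory per package segment. The hypothetical helper below sketches that mapping for the UniFFI bindings. It assumes the bindings file is named after the UDL namespace (shared in this example), which matches the generated-types listing shown later in this guide.

```rust
// Hypothetical helper: where UniFFI's Kotlin bindings land for a package.
// Assumes the file is named after the UDL namespace ("shared" here).
fn bindings_path(out_dir: &str, package: &str, namespace: &str) -> String {
    // Each dot-separated package segment becomes one directory level.
    let package_dir = package.replace('.', "/");
    format!("{out_dir}/{package_dir}/{namespace}.kt")
}

fn main() {
    let path = bindings_path(
        "shared_types/generated/java",
        "com.example.simple_counter.shared", // from shared/uniffi.toml
        "shared",
    );
    // prints "shared_types/generated/java/com/example/simple_counter/shared/shared.kt"
    println!("{path}");
}
```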

We can now add this library as a dependency of our app.

Edit the app's build.gradle (/Android/app/build.gradle) to look like this:

```groovy
plugins {
    alias(libs.plugins.android.application)
    alias(libs.plugins.kotlin.android)
    alias(libs.plugins.kotlin.compose)
}

android {
    namespace 'com.example.simple_counter'
    compileSdk 35

    defaultConfig {
        applicationId "com.example.simple_counter"
        minSdk 34
        targetSdk 34
        versionCode 1
        versionName "1.0"

        testInstrumentationRunner "androidx.test.runner.AndroidJUnitRunner"
    }

    buildTypes {
        release {
            minifyEnabled false
            proguardFiles getDefaultProguardFile('proguard-android-optimize.txt'), 'proguard-rules.pro'
        }
    }
    compileOptions {
        sourceCompatibility JavaVersion.VERSION_20
        targetCompatibility JavaVersion.VERSION_20
    }
    kotlinOptions {
        jvmTarget = '20'
    }
    buildFeatures {
        compose true
    }
}

dependencies {
    // our shared library
    implementation project(path: ':shared')

    // added dependencies
    implementation libs.lifecycle.viewmodel.compose

    // original dependencies
    implementation libs.androidx.core.ktx
    implementation libs.androidx.lifecycle.runtime.ktx
    implementation libs.androidx.activity.compose
    implementation platform(libs.androidx.compose.bom)
    implementation libs.androidx.ui
    implementation libs.androidx.ui.graphics
    implementation libs.androidx.ui.tooling.preview
    implementation libs.androidx.material3
    testImplementation libs.junit
    androidTestImplementation libs.androidx.junit
    androidTestImplementation libs.androidx.espresso.core
    androidTestImplementation platform(libs.androidx.compose.bom)
    androidTestImplementation libs.androidx.ui.test.junit4
    debugImplementation libs.androidx.ui.tooling
    debugImplementation libs.androidx.ui.test.manifest
}
```

In our gradle files, we are referencing a "Version Catalog" to manage our dependency versions, so you will need to ensure this is kept up to date.

Our catalog (Android/gradle/libs.versions.toml) will end up looking like this:

```toml
[versions]
agp = "8.9.1"
kotlin = "2.0.0"
coreKtx = "1.15.0"
junit = "4.13.2"
junitVersion = "1.2.1"
espressoCore = "3.6.1"
lifecycleRuntimeKtx = "2.8.7"
activityCompose = "1.10.1"
composeBom = "2025.03.00"
# added
jna = "5.15.0"
lifecycle = "2.8.7"
appcompat = "1.7.0"
material = "1.12.0"
rustAndroid = "0.9.6"

[libraries]
androidx-core-ktx = { group = "androidx.core", name = "core-ktx", version.ref = "coreKtx" }
junit = { group = "junit", name = "junit", version.ref = "junit" }
androidx-junit = { group = "androidx.test.ext", name = "junit", version.ref = "junitVersion" }
androidx-espresso-core = { group = "androidx.test.espresso", name = "espresso-core", version.ref = "espressoCore" }
androidx-lifecycle-runtime-ktx = { group = "androidx.lifecycle", name = "lifecycle-runtime-ktx", version.ref = "lifecycleRuntimeKtx" }
androidx-activity-compose = { group = "androidx.activity", name = "activity-compose", version.ref = "activityCompose" }
androidx-compose-bom = { group = "androidx.compose", name = "compose-bom", version.ref = "composeBom" }
androidx-ui = { group = "androidx.compose.ui", name = "ui" }
androidx-ui-graphics = { group = "androidx.compose.ui", name = "ui-graphics" }
androidx-ui-tooling = { group = "androidx.compose.ui", name = "ui-tooling" }
androidx-ui-tooling-preview = { group = "androidx.compose.ui", name = "ui-tooling-preview" }
androidx-ui-test-manifest = { group = "androidx.compose.ui", name = "ui-test-manifest" }
androidx-ui-test-junit4 = { group = "androidx.compose.ui", name = "ui-test-junit4" }
androidx-material3 = { group = "androidx.compose.material3", name = "material3" }
# added
jna = { module = "net.java.dev.jna:jna", version.ref = "jna" }
lifecycle-viewmodel-compose = { module = "androidx.lifecycle:lifecycle-viewmodel-compose", version.ref = "lifecycle" }
androidx-appcompat = { group = "androidx.appcompat", name = "appcompat", version.ref = "appcompat" }
material = { group = "com.google.android.material", name = "material", version.ref = "material" }

[plugins]
android-application = { id = "com.android.application", version.ref = "agp" }
kotlin-android = { id = "org.jetbrains.kotlin.android", version.ref = "kotlin" }
kotlin-compose = { id = "org.jetbrains.kotlin.plugin.compose", version.ref = "kotlin" }
# added
rust-android = { id = "org.mozilla.rust-android-gradle.rust-android", version.ref = "rustAndroid" }
android-library = { id = "com.android.library", version.ref = "agp" }
```
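Gradle exposes each catalog alias as a type-safe accessor on libs. It turns the separators in an alias (-, _ and .) into dots, and places plugins under libs.plugins. That is why lifecycle-viewmodel-compose in the catalog is referenced as libs.lifecycle.viewmodel.compose in build.gradle.

A simplified Rust sketch of that naming rule follows. The Section enum and accessor function are hypothetical, and the sketch ignores Gradle's other alias validation rules.

```rust
// Simplified sketch of how Gradle turns version-catalog aliases into the
// accessors used in build.gradle. It only models separator normalisation.
#[derive(Clone, Copy)]
enum Section {
    Libraries,
    Plugins,
}

fn accessor(section: Section, alias: &str) -> String {
    // '-', '_' and '.' all act as separators and become '.' in the accessor.
    let path: String = alias
        .chars()
        .map(|c| if c == '-' || c == '_' { '.' } else { c })
        .collect();
    match section {
        Section::Libraries => format!("libs.{path}"),
        Section::Plugins => format!("libs.plugins.{path}"),
    }
}

fn main() {
    // [libraries] lifecycle-viewmodel-compose -> libs.lifecycle.viewmodel.compose
    println!("{}", accessor(Section::Libraries, "lifecycle-viewmodel-compose"));
    // [plugins] rust-android -> libs.plugins.rust.android
    println!("{}", accessor(Section::Plugins, "rust-android"));
}
```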

The Rust shared library

We'll use the following tools to incorporate our Rust shared library into the Android library added above. This includes compiling and linking the Rust dynamic library and generating the runtime bindings and the shared types.

- The Android NDK
- Mozilla's Rust gradle plugin for Android
  - This plugin depends on Python 3; make sure you have a version installed
- Java Native Access
- UniFFI to generate Kotlin bindings

The NDK can be installed from "Tools, SDK Manager, SDK Tools" in Android Studio.

Let's get started.

Add the four Rust Android toolchains to your system:

```bash
rustup target add aarch64-linux-android armv7-linux-androideabi i686-linux-android x86_64-linux-android
```

Edit the project's build.gradle (/Android/build.gradle) to look like this:

```groovy
// Top-level build file where you can add configuration options common to all sub-projects/modules.
plugins {
    alias(libs.plugins.android.application) apply false
    alias(libs.plugins.kotlin.android) apply false
    alias(libs.plugins.kotlin.compose) apply false
    alias(libs.plugins.android.library) apply false
    alias(libs.plugins.rust.android) apply false
}
```

Edit the library's build.gradle (/Android/shared/build.gradle) to look like this:

```groovy
plugins {
    alias(libs.plugins.android.library)
    alias(libs.plugins.kotlin.android)
}

android {
    namespace 'com.example.simple_counter.shared'
    compileSdk 35

    ndkVersion "28.0.13004108"

    defaultConfig {
        minSdk 34

        testInstrumentationRunner "androidx.test.runner.AndroidJUnitRunner"
        consumerProguardFiles "consumer-rules.pro"
    }

    buildTypes {
        release {
            minifyEnabled false
            proguardFiles getDefaultProguardFile('proguard-android-optimize.txt'), 'proguard-rules.pro'
        }
    }
    compileOptions {
        sourceCompatibility JavaVersion.VERSION_20
        targetCompatibility JavaVersion.VERSION_20
    }
    kotlinOptions {
        jvmTarget = '20'
    }

    sourceSets {
        main.java.srcDirs += "${projectDir}/../../shared_types/generated/java"
    }
}

dependencies {
    // added
    implementation(libs.jna) {
        artifact {
            type = "aar"
        }
    }

    // original
    implementation libs.androidx.core.ktx
    implementation libs.androidx.appcompat
    implementation libs.material
    testImplementation libs.junit
    androidTestImplementation libs.androidx.junit
    androidTestImplementation libs.androidx.espresso.core
}

apply plugin: 'org.mozilla.rust-android-gradle.rust-android'

cargo {
    module = "../.."
    libname = "shared"
    profile = "debug"
    // these are the four recommended targets for Android that will ensure your library works on all mainline Android devices
    // make sure you have included the Rust toolchain for each of these targets:
    // `rustup target add aarch64-linux-android armv7-linux-androideabi i686-linux-android x86_64-linux-android`
    targets = ["arm", "arm64", "x86", "x86_64"]
    extraCargoBuildArguments = ['--package', 'shared']
    cargoCommand = System.getProperty("user.home") + "/.cargo/bin/cargo"
    rustcCommand = System.getProperty("user.home") + "/.cargo/bin/rustc"
    pythonCommand = "python3"
}

afterEvaluate {
    // The `cargoBuild` task isn't available until after evaluation.
    android.libraryVariants.configureEach { variant ->
        def productFlavor = ""
        variant.productFlavors.each {
            productFlavor += "${it.name.capitalize()}"
        }
        def buildType = "${variant.buildType.name.capitalize()}"

        tasks.named("preBuild") {
            it.dependsOn(tasks.named("typesGen"), tasks.named("bindGen"))
        }

        tasks.named("generate${productFlavor}${buildType}Assets") {
            it.dependsOn(tasks.named("cargoBuild"))
        }

        // This dependsOn is needed until https://github.com/mozilla/rust-android-gradle/issues/85 is resolved; the fix comes from #118
        tasks.withType(com.nishtahir.CargoBuildTask).forEach { buildTask ->
            tasks.withType(com.android.build.gradle.tasks.MergeSourceSetFolders).configureEach {
                it.inputs.dir(new File(new File(buildDir, "rustJniLibs"), buildTask.toolchain.folder))
                it.dependsOn(buildTask)
            }
        }
    }
}

// This dependsOn is needed until https://github.com/mozilla/rust-android-gradle/issues/85 is resolved; the fix comes from #118
tasks.matching { it.name.matches(/merge.*JniLibFolders/) }.configureEach {
    it.inputs.dir(new File(buildDir, "rustJniLibs/android"))
    it.dependsOn("cargoBuild")
}

tasks.register('bindGen', Exec) {
    def outDir = "${projectDir}/../../shared_types/generated/java"
    workingDir "../../"
    if (System.getProperty('os.name').toLowerCase().contains('windows')) {
        commandLine("cmd", "/c",
                "cargo build -p shared && " + "target\\debug\\uniffi-bindgen generate shared\\src\\shared.udl " + "--language kotlin " + "--out-dir " + outDir.replace('/', '\\'))
    } else {
        commandLine("sh", "-c",
                """\
                cargo build -p shared && \
                target/debug/uniffi-bindgen generate shared/src/shared.udl \
                --language kotlin \
                --out-dir $outDir
                """)
    }
}

tasks.register('typesGen', Exec) {
    workingDir "../../"
    if (System.getProperty('os.name').toLowerCase().contains('windows')) {
        commandLine("cmd", "/c", "cargo build -p shared_types")
    } else {
        commandLine("sh", "-c", "cargo build -p shared_types")
    }
}
```

You will need to set ndkVersion to one you have installed. Go to "Tools, SDK Manager, SDK Tools" and check "Show Package Details" to find your installed version, or to install the version matching the build.gradle above.
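The afterEvaluate block builds the name of the assets task from the variant's product flavors and build type, using Groovy's capitalize(). The Rust sketch below reproduces only that string logic, with hypothetical function names, to show which task cargoBuild is attached to. This project defines no product flavors, so for the debug variant the task is generateDebugAssets.

```rust
// Reproduces only the string logic in the afterEvaluate block: the name of
// the generate<Flavors><BuildType>Assets task that cargoBuild is wired to.
// Function names are hypothetical.

// Like Groovy's String.capitalize(): upper-case the first character only.
fn capitalize(s: &str) -> String {
    let mut chars = s.chars();
    match chars.next() {
        Some(first) => first.to_uppercase().collect::<String>() + chars.as_str(),
        None => String::new(),
    }
}

fn generate_assets_task(product_flavors: &[&str], build_type: &str) -> String {
    // Flavor names are concatenated in order, each capitalized.
    let flavors: String = product_flavors.iter().map(|f| capitalize(f)).collect();
    format!("generate{}{}Assets", flavors, capitalize(build_type))
}

fn main() {
    // This project has no product flavors.
    println!("{}", generate_assets_task(&[], "debug")); // prints "generateDebugAssets"
    println!("{}", generate_assets_task(&[], "release")); // prints "generateReleaseAssets"
}
```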

If you now build your project you should see the newly built shared library object file.

```
$ tree Android/shared/build/rustJniLibs
Android/shared/build/rustJniLibs
└── android
    ├── arm64-v8a
    │   └── libshared.so
    ├── armeabi-v7a
    │   └── libshared.so
    ├── x86
    │   └── libshared.so
    └── x86_64
        └── libshared.so
```
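Each Android target goes by three names, and they correspond one to one:

- its entry in the cargo block's targets list
- the Rust target added with rustup
- its ABI directory in the listing above

The table below is a Rust sketch of that correspondence; the struct and function names are hypothetical. It can help when checking that every ABI you ship has a matching rustup target installed.

```rust
// Hypothetical lookup table relating the three names each Android target has.
struct AndroidTarget {
    plugin_name: &'static str, // entry in the cargo { targets = [...] } list
    rust_triple: &'static str, // argument to `rustup target add`
    abi_dir: &'static str,     // directory under build/rustJniLibs/android
}

static TARGETS: [AndroidTarget; 4] = [
    AndroidTarget { plugin_name: "arm", rust_triple: "armv7-linux-androideabi", abi_dir: "armeabi-v7a" },
    AndroidTarget { plugin_name: "arm64", rust_triple: "aarch64-linux-android", abi_dir: "arm64-v8a" },
    AndroidTarget { plugin_name: "x86", rust_triple: "i686-linux-android", abi_dir: "x86" },
    AndroidTarget { plugin_name: "x86_64", rust_triple: "x86_64-linux-android", abi_dir: "x86_64" },
];

fn lookup(plugin_name: &str) -> Option<&'static AndroidTarget> {
    TARGETS.iter().find(|t| t.plugin_name == plugin_name)
}

fn main() {
    // Rebuild the rustup command from the table.
    let triples: Vec<&str> = TARGETS.iter().map(|t| t.rust_triple).collect();
    println!("rustup target add {}", triples.join(" "));

    // Where the plugin puts the library for each target.
    for t in TARGETS.iter() {
        println!("{} -> rustJniLibs/android/{}/libshared.so", t.plugin_name, t.abi_dir);
    }
}
```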

You should also see the generated types. Note that the sourceSets directive in the shared library gradle file (above) allows us to build our shared library against the generated types in the shared_types/generated folder.

```
$ tree shared_types/generated/java
shared_types/generated/java
└── com
    ├── example
    │   └── simple_counter
    │       ├── shared
    │       │   └── shared.kt
    │       └── shared_types
    │           ├── Effect.java
    │           ├── Event.java
    │           ├── RenderOperation.java
    │           ├── Request.java
    │           ├── Requests.java
    │           ├── TraitHelpers.java
    │           └── ViewModel.java
    └── novi
        ├── bincode
        │   ├── BincodeDeserializer.java
        │   └── BincodeSerializer.java
        └── serde
            ├── ArrayLen.java
            ├── BinaryDeserializer.java
            ├── BinarySerializer.java
            ├── Bytes.java
            ├── DeserializationError.java
            ├── Deserializer.java
            ├── Int128.java
            ├── SerializationError.java
            ├── Serializer.java
            ├── Slice.java
            ├── Tuple2.java
            ├── Tuple3.java
            ├── Tuple4.java
            ├── Tuple5.java
            ├── Tuple6.java
            ├── Unit.java
            └── Unsigned.java
```

Create some UI and run in the Emulator

There is a slightly more advanced example of an Android app in the Crux repository.

However, we will use the simple counter example, which has shared and shared_types libraries that will work with the following example code.

Simple counter example

A simple app that increments, decrements and resets a counter.

Wrap the core to support capabilities

First, let's add some boilerplate code to wrap our core and handle the capabilities that we are using. For this example, we only need to support the Render capability, which triggers a render of the UI.

Let's create a file called Core, using "File, New, Kotlin Class/File, File".

This code that wraps the core only needs to be written once — it only grows when we need to support additional capabilities.

Edit Android/app/src/main/java/com/example/simple_counter/Core.kt to look like the following. This code sends our (UI-generated) events to the core, and handles any effects that the core asks for. In this simple example, we aren't calling any HTTP APIs or handling any side effects other than rendering the UI, so we just handle this render effect by updating the published view model from the core.

```kotlin
package com.example.simple_counter

import androidx.compose.runtime.getValue
import androidx.compose.runtime.mutableStateOf
import androidx.compose.runtime.setValue
import com.crux.example.simple_counter.Effect
import com.crux.example.simple_counter.Event
import com.crux.example.simple_counter.Request
import com.crux.example.simple_counter.Requests
import com.crux.example.simple_counter.ViewModel
import com.example.simple_counter.shared.processEvent
import com.example.simple_counter.shared.view

class Core : androidx.lifecycle.ViewModel() {
    var view: ViewModel? by mutableStateOf(null)
        private set

    fun update(event: Event) {
        val effects = processEvent(event.bincodeSerialize())

        val requests = Requests.bincodeDeserialize(effects)
        for (request in requests) {
            processEffect(request)
        }
    }

    private fun processEffect(request: Request) {
        when (request.effect) {
            is Effect.Render -> {
                this.view = ViewModel.bincodeDeserialize(view())
            }
        }
    }
}
```

That when statement, above, is where you would handle any other effects that your core might ask for. For example, if your core needs to make an HTTP request, you would handle that here. To see an example of this, take a look at the counter example in the Crux repository.
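As an illustration of how that dispatch grows, here is a Rust sketch with a second, hypothetical Http effect next to Render. The HttpRequest type and the Shell struct are invented for this example and are not what crux_http generates. The point is that each new capability adds one branch to the shell's effect handler.

```rust
// Hypothetical: a second effect next to Render. These types are invented for
// illustration and are not the ones crux_http generates.
#[derive(Debug)]
struct HttpRequest {
    method: String,
    url: String,
}

#[derive(Debug)]
enum Effect {
    Render,
    Http(HttpRequest),
}

#[derive(Default)]
struct Shell {
    renders: u32,
    outgoing: Vec<String>,
}

impl Shell {
    fn process_effect(&mut self, effect: Effect) {
        // `match` must be exhaustive, so adding a variant to Effect is a
        // compile error here until the shell handles it.
        match effect {
            Effect::Render => self.renders += 1,
            Effect::Http(request) => {
                // A real shell would perform the request and pass the result
                // back to the core; this sketch only records it.
                self.outgoing.push(format!("{} {}", request.method, request.url));
            }
        }
    }
}

fn main() {
    let mut shell = Shell::default();
    shell.process_effect(Effect::Render);
    shell.process_effect(Effect::Http(HttpRequest {
        method: "GET".to_string(),
        url: "https://example.com/api/count".to_string(), // hypothetical URL
    }));
    println!("renders: {}, outgoing: {:?}", shell.renders, shell.outgoing);
}
```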

Edit /Android/app/src/main/java/com/example/simple_counter/MainActivity.kt to look like the following:

```kotlin
package com.example.simple_counter

import android.os.Bundle
import androidx.activity.ComponentActivity
import androidx.activity.compose.setContent
import androidx.compose.foundation.layout.Arrangement
import androidx.compose.foundation.layout.Column
import androidx.compose.foundation.layout.Row
import androidx.compose.foundation.layout.fillMaxSize
import androidx.compose.foundation.layout.padding
import androidx.compose.material3.Button
import androidx.compose.material3.ButtonDefaults
import androidx.compose.material3.MaterialTheme
import androidx.compose.material3.Surface
import androidx.compose.material3.Text
import androidx.compose.runtime.Composable
import androidx.compose.ui.Alignment
import androidx.compose.ui.Modifier
import androidx.compose.ui.graphics.Color
import androidx.compose.ui.tooling.preview.Preview
import androidx.compose.ui.unit.dp
import androidx.lifecycle.viewmodel.compose.viewModel
import com.crux.example.simple_counter.Event
import com.example.simple_counter.ui.theme.SimpleCounterTheme

class MainActivity : ComponentActivity() {
    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContent {
            SimpleCounterTheme {
                Surface(
                    modifier = Modifier.fillMaxSize(),
                    color = MaterialTheme.colorScheme.background
                ) { View() }
            }
        }
    }
}

@Composable
fun View(core: Core = viewModel()) {
    Column(
        horizontalAlignment = Alignment.CenterHorizontally,
        verticalArrangement = Arrangement.Center,
        modifier = Modifier.fillMaxSize().padding(10.dp),
    ) {
        Text(text = (core.view?.count ?: "0").toString(), modifier = Modifier.padding(10.dp))
        Row(horizontalArrangement = Arrangement.spacedBy(10.dp)) {
            Button(
                onClick = { core.update(Event.Reset()) },
                colors =
                    ButtonDefaults.buttonColors(
                        containerColor = MaterialTheme.colorScheme.error
                    )
            ) { Text(text = "Reset", color = Color.White) }
            Button(
                onClick = { core.update(Event.Increment()) },
                colors =
                    ButtonDefaults.buttonColors(
                        containerColor = MaterialTheme.colorScheme.primary
                    )
            ) { Text(text = "Increment", color = Color.White) }
            Button(
                onClick = { core.update(Event.Decrement()) },
                colors =
                    ButtonDefaults.buttonColors(
                        containerColor = MaterialTheme.colorScheme.secondary
                    )
            ) { Text(text = "Decrement", color = Color.White) }
        }
    }
}

@Preview(showBackground = true)
@Composable
fun DefaultPreview() {
    SimpleCounterTheme { View() }
}
```

Web

When we use Crux to build Web apps, the shared core is compiled to WebAssembly. This has the advantage of sandboxing the core, physically preventing it from performing any side-effects (which is conveniently one of the main goals of Crux anyway!). The invariants of Crux are actually enforced by the WebAssembly runtime.

We do have to decide how much of our app we want to include in the WebAssembly binary, though. Typically, if we are writing our UI in TypeScript (or JavaScript) we would just compile our shared behavior and the Crux Core to WebAssembly. However, if we are writing our UI in Rust we can compile the entire app to WebAssembly.

Web apps with TypeScript UI

When building UI with React, or any other JS/TS framework, the Core API bindings are generated in TypeScript by wasm-bindgen (which wasm-pack runs for us) and, just like with Android and iOS, we must serialize and deserialize the messages into and out of the WebAssembly binary.

The shared core (that contains our app's behavior) is compiled to a WebAssembly binary, using wasm-pack, which creates an npm package for us that we can add to our project just like any other npm package.

The shared types are also generated by Crux as a TypeScript npm package, which we can add in the same way (e.g. with pnpm add).

This section has two guides for building TypeScript UI with Crux:

Web apps with Rust UI

When building UI with Rust, we can compile the entire app to WebAssembly, and reference the core and the shared crate directly. We do not have to serialize and deserialize messages, because the messages stay in the same memory space.

The shared core (that contains our app's behavior) and the UI code are compiled to a WebAssembly binary, using the relevant toolchain for the language and framework we are using. We use trunk for the Yew and Leptos guides and dx for the Dioxus guide.

When using Rust throughout, we can simply use Cargo to add the shared crate directly to our app.
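The TypeScript, Swift and Kotlin shells exchange bytes with the core. A Rust UI, by contrast, can call into the core with ordinary typed values. The sketch below is plain Rust, not the crux_core API: Event, Model, ViewModel, update and view are hypothetical stand-ins for a counter app, and nothing is serialized between the UI code and the core logic.

```rust
// Hypothetical counter "core" called directly from Rust UI code. No bytes,
// no serializer: the UI passes an Event value and reads a ViewModel value.
#[derive(Clone, Copy, Debug)]
enum Event {
    Increment,
    Decrement,
    Reset,
}

#[derive(Default)]
struct Model {
    count: isize,
}

#[derive(Debug, PartialEq)]
struct ViewModel {
    count: String,
}

fn update(event: Event, model: &mut Model) {
    match event {
        Event::Increment => model.count += 1,
        Event::Decrement => model.count -= 1,
        Event::Reset => model.count = 0,
    }
}

fn view(model: &Model) -> ViewModel {
    ViewModel { count: model.count.to_string() }
}

fn main() {
    let mut model = Model::default();
    // In a Yew, Leptos or Dioxus app these calls would come from button handlers.
    for event in [Event::Increment, Event::Increment, Event::Decrement] {
        update(event, &mut model);
    }
    println!("{}", view(&model).count); // prints "1"
}
```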

This section has three guides for building Rust UI with Crux:

Web — TypeScript and React (Next.js)

These are the steps to set up and run a simple TypeScript Web app that calls into a shared core.

This walk-through assumes you have already added the shared and shared_types libraries to your repo, as described in Shared core and types.

Create a Next.js App

For this walk-through, we'll use the pnpm package manager for no reason other than we like it the most!

Let's create a simple Next.js app for TypeScript, using pnpx (from pnpm). You can probably accept the defaults.

```bash
pnpx create-next-app@latest
```

Compile our Rust shared library

When we build our app, we also want to compile the Rust core to WebAssembly so that it can be referenced from our code.

To do this, we'll use wasm-pack, which you can install like this:

```bash
# with homebrew
brew install wasm-pack

# or directly
curl https://rustwasm.github.io/wasm-pack/installer/init.sh -sSf | sh
```

Now that we have wasm-pack installed, we can build our shared library to WebAssembly for the browser.

```bash
(cd shared && wasm-pack build --target web)
```

You might want to add a wasm:build script to your package.json file, and call it when you build your Next.js project:

```json
{
  "scripts": {
    "build": "pnpm run wasm:build && next build",
    "dev": "pnpm run wasm:build && next dev",
    "wasm:build": "cd ../shared && wasm-pack build --target web"
  }
}
```

Add the shared library as a Wasm package to your web-nextjs project:

```sh
cd web-nextjs
pnpm add ../shared/pkg
```

Add the Shared Types

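To generate the shared types for TypeScript, we can just run cargo build from the root of our repository. You can check that they have been generated correctly:

```sh
# --tree is not a GNU ls flag: this assumes ls is aliased to lsd or eza.
# `tree shared_types/generated/typescript` gives similar output.
ls --tree shared_types/generated/typescript
```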

```text
shared_types/generated/typescript
├── bincode
│   ├── bincodeDeserializer.d.ts
│   ├── bincodeDeserializer.js
│   ├── bincodeDeserializer.ts
│   ├── bincodeSerializer.d.ts
│   ├── bincodeSerializer.js
│   ├── bincodeSerializer.ts
│   ├── mod.d.ts
│   ├── mod.js
│   └── mod.ts
├── node_modules
│   └── typescript -> .pnpm/typescript@4.8.4/node_modules/typescript
├── package.json
├── pnpm-lock.yaml
├── serde
│   ├── binaryDeserializer.d.ts
│   ├── binaryDeserializer.js
│   ├── binaryDeserializer.ts
│   ├── binarySerializer.d.ts
│   ├── binarySerializer.js
│   ├── binarySerializer.ts
│   ├── deserializer.d.ts
│   ├── deserializer.js
│   ├── deserializer.ts
│   ├── mod.d.ts
│   ├── mod.js
│   ├── mod.ts
│   ├── serializer.d.ts
│   ├── serializer.js
│   ├── serializer.ts
│   ├── types.d.ts
│   ├── types.js
│   └── types.ts
├── tsconfig.json
└── types
    ├── shared_types.d.ts
    ├── shared_types.js
    └── shared_types.ts
```

You can see that it also generates an npm package that we can add directly to our project.

```sh
pnpm add ../shared_types/generated/typescript
```
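The generated package represents each variant of a Rust enum as its own TypeScript class. That is why the UI code in this guide uses names like EventVariantReset and EffectVariantRender. The sketch below is a simplified, hand-written stand-in for that shape, not the generated code. It uses a hypothetical CounterEvent enum and a hypothetical variantIndex method, and it assumes the variants are declared in the order Increment, Decrement, Reset. It shows how code can branch on the class an object was constructed from.

```typescript
// Simplified stand-in for generated enum classes.
// CounterEvent and its variants are hypothetical, not generated code.

abstract class CounterEvent {
  // Position of the variant in the (assumed) Rust enum declaration order.
  abstract variantIndex(): number;
}

class CounterEventIncrement extends CounterEvent {
  variantIndex(): number {
    return 0;
  }
}

class CounterEventDecrement extends CounterEvent {
  variantIndex(): number {
    return 1;
  }
}

class CounterEventReset extends CounterEvent {
  variantIndex(): number {
    return 2;
  }
}

// Branch on the concrete class the object was constructed from.
function describeEvent(event: CounterEvent): string {
  switch (event.constructor) {
    case CounterEventIncrement:
      return "increment";
    case CounterEventDecrement:
      return "decrement";
    case CounterEventReset:
      return "reset";
    default:
      throw new Error("unknown CounterEvent variant");
  }
}
```

Comparing `constructor` matches only the exact class. An `instanceof` check would also work, and it would additionally match subclasses.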

Create some UI

There are other, more advanced, examples of Next.js apps in the Crux repository.

However, we will use the simple counter example, which has shared and shared_types libraries that will work with the following example code.

Simple counter example

A simple app that increments, decrements and resets a counter.

Wrap the core to support capabilities

First, let's add some boilerplate code to wrap our core and handle the capabilities that we are using. For this example, we only need to support the Render capability, which triggers a render of the UI.

This code that wraps the core only needs to be written once — it only grows when we need to support additional capabilities.

Edit src/app/core.ts to look like the following. This code sends our (UI-generated) events to the core, and handles any effects that the core asks for. In this simple example, we aren't calling any HTTP APIs or handling any side effects other than rendering the UI, so we just handle this render effect by updating the component's view hook with the core's ViewModel.

Notice that we have to serialize and deserialize the data that we pass between the core and the shell. This is because the core is running in a separate WebAssembly instance, and so we can't just pass the data directly.

```typescript
import type { Dispatch, SetStateAction } from "react";

import { process_event, view } from "shared/shared";
import type { Effect, Event } from "shared_types/types/shared_types";
import {
  EffectVariantRender,
  ViewModel,
  Request,
} from "shared_types/types/shared_types";
import {
  BincodeSerializer,
  BincodeDeserializer,
} from "shared_types/bincode/mod";

export function update(
  event: Event,
  callback: Dispatch<SetStateAction<ViewModel>>,
) {
  console.log("event", event);

  // Serialize the event and pass the bytes across the Wasm boundary
  const serializer = new BincodeSerializer();
  event.serialize(serializer);

  // The core replies with a serialized list of effect requests
  const effects = process_event(serializer.getBytes());

  const requests = deserializeRequests(effects);
  for (const { id, effect } of requests) {
    processEffect(id, effect, callback);
  }
}

function processEffect(
  _id: number,
  effect: Effect,
  callback: Dispatch<SetStateAction<ViewModel>>,
) {
  console.log("effect", effect);

  switch (effect.constructor) {
    case EffectVariantRender: {
      // Fetch the latest view model from the core and update the React state
      callback(deserializeView(view()));
      break;
    }
  }
}

function deserializeRequests(bytes: Uint8Array): Request[] {
  const deserializer = new BincodeDeserializer(bytes);
  // The list is length-prefixed: read the length, then each request
  const len = deserializer.deserializeLen();
  const requests: Request[] = [];
  for (let i = 0; i < len; i++) {
    const request = Request.deserialize(deserializer);
    requests.push(request);
  }
  return requests;
}

function deserializeView(bytes: Uint8Array): ViewModel {
  return ViewModel.deserialize(new BincodeDeserializer(bytes));
}
```
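Under the hood, the serializer turns values into plain bytes that can be copied into the Wasm module's memory. The toy sketch below illustrates that framing with hand-rolled encoding. It assumes bincode's default fixed-width little-endian layout: a u32 for an enum variant index and a u64 for a list length. It is only an illustration. The generated BincodeSerializer and BincodeDeserializer do the real work, and a real Request carries an id and an effect rather than a bare variant index.

```typescript
// Toy illustration of bincode-style framing; not the generated serializer.

// Encode a unit enum variant as a little-endian u32 variant index.
function encodeVariant(index: number): Uint8Array {
  const bytes = new Uint8Array(4);
  new DataView(bytes.buffer).setUint32(0, index, true); // true = little-endian
  return bytes;
}

// Encode a list of unit variants: a little-endian u64 length,
// followed by one u32 variant index per item.
function encodeVariantList(indices: number[]): Uint8Array {
  const bytes = new Uint8Array(8 + 4 * indices.length);
  const view = new DataView(bytes.buffer);
  // Write the u64 length as two u32 halves (lists here stay far below 2^32).
  view.setUint32(0, indices.length, true);
  view.setUint32(4, 0, true);
  indices.forEach((index, i) => view.setUint32(8 + 4 * i, index, true));
  return bytes;
}

// Decode the same layout, much as deserializeRequests reads a length
// and then deserializes one item per iteration.
function decodeVariantList(bytes: Uint8Array): number[] {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const len = view.getUint32(0, true) + view.getUint32(4, true) * 2 ** 32;
  const items: number[] = [];
  for (let i = 0; i < len; i++) {
    items.push(view.getUint32(8 + 4 * i, true));
  }
  return items;
}
```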

The switch statement in processEffect is where you would handle any other effects that your core might ask for. For example, if your core needs to make an HTTP request, you would handle that here. To see an example of this, take a look at the counter example in the Crux repository.
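The sketch below gives a rough idea of how that switch grows. It replaces the generated effect classes with a discriminated union and adds a hypothetical http effect next to render. The Shell interface and its fetchAndRespond callback are assumptions. They stand in for whatever your app uses to perform the request and pass the result back to the core together with the request's id. They are not part of the Crux API.

```typescript
// Hypothetical shapes; the real types come from the generated shared_types package.
type ViewModel = { count: string };

type Effect =
  | { kind: "render" }
  | { kind: "http"; url: string };

interface EffectRequest {
  id: number;
  effect: Effect;
}

// Hypothetical hooks the shell provides: reading the view from the core,
// re-rendering the UI, and performing an HTTP request whose result is
// handed back to the core along with the request id.
interface Shell {
  view: () => ViewModel;
  render: (view: ViewModel) => void;
  fetchAndRespond: (id: number, url: string) => void;
}

function processEffect({ id, effect }: EffectRequest, shell: Shell): void {
  switch (effect.kind) {
    case "render":
      // Pull the latest view model and hand it to the UI.
      shell.render(shell.view());
      break;
    case "http":
      // The id lets the response be matched to this request.
      shell.fetchAndRespond(id, effect.url);
      break;
    default: {
      // Exhaustiveness check: an unhandled Effect variant fails to compile here.
      const unhandled: never = effect;
      throw new Error(`unhandled effect: ${JSON.stringify(unhandled)}`);
    }
  }
}
```

The `never` assignment in the default branch turns a forgotten effect into a compile-time error, which helps as the core gains capabilities.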

Create a component to render the UI

Edit src/app/page.tsx to look like the following. This code loads the WebAssembly core and sends it an initial event. Notice that we pass the setView state setter (returned by the useState hook) to the update function, so that we can update the state in response to a render effect from the core.

```tsx
"use client";

import type { NextPage } from "next";
import { useEffect, useRef, useState } from "react";

import init_core from "shared/shared";
import {
  ViewModel,
  EventVariantReset,
  EventVariantIncrement,
  EventVariantDecrement,
} from "shared_types/types/shared_types";

import { update } from "./core";

// next/head is not supported in the App Router (src/app), so the page
// title comes from the metadata export in layout.tsx instead.
const Home: NextPage = () => {
  const [view, setView] = useState(new ViewModel("0"));
  const initialized = useRef(false);

  useEffect(
    () => {
      if (!initialized.current) {
        initialized.current = true;

        // Load the Wasm core, then send the initial event
        init_core().then(() => {
          update(new EventVariantReset(), setView);
        });
      }
    },
    // eslint-disable-next-line react-hooks/exhaustive-deps
    /*once*/ []
  );

  return (
    <main>
      <section className="box container has-text-centered m-5">
        <p className="is-size-5">{view.count}</p>
        <div className="buttons section is-centered">
          <button
            className="button is-primary is-danger"
            onClick={() => update(new EventVariantReset(), setView)}
          >
            {"Reset"}
          </button>
          <button
            className="button is-primary is-success"
            onClick={() => update(new EventVariantIncrement(), setView)}
          >
            {"Increment"}
          </button>
          <button
            className="button is-primary is-warning"
            onClick={() => update(new EventVariantDecrement(), setView)}
          >
            {"Decrement"}
          </button>
        </div>
      </section>
    </main>
  );
};

export default Home;
```
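The useRef guard keeps the effect body from running twice. React 18's Strict Mode deliberately runs effect setup an extra time in development. If more than one component needs the core, one option is to also memoize the Wasm initialization at module level. Below is a minimal sketch of such a helper. The name once is hypothetical and is not part of Crux or React.

```typescript
// Wrap an async initializer so that every caller shares a single Promise.
// The first call starts initialization; later calls reuse the same Promise.
// Note: a failed initialization stays cached as a rejected Promise.
function once<T>(init: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending === undefined) {
      pending = init();
    }
    return pending;
  };
}
```

For example, a module-level `const initCore = once(init_core);` could replace the direct init_core() call. This only deduplicates loading the Wasm module. The initial Reset event is still sent from the guarded effect.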

Now all we need is some CSS. First add the Bulma package, and then import it in src/app/layout.tsx.

```sh
pnpm add bulma
```

```tsx
import "bulma/css/bulma.css";
import type { Metadata } from "next";
import { Inter } from "next/font/google";

const inter = Inter({ subsets: ["latin"] });

export const metadata: Metadata = {
  title: "Crux Simple Counter Example",
  description: "Rust Core, TypeScript Shell (NextJS)",
};

export default function RootLayout({
  children,
}: {
  children: React.ReactNode;
}) {
  return (
    <html lang="en">
      <body className={inter.className}>{children}</body>
    </html>
  );
}
```

Build and serve our app

We can build our app and serve it for the browser in one step. If you added the wasm:build script above, this also rebuilds the Wasm core first.

```sh
pnpm dev
```

Web — TypeScript and React (Remix)

These are the steps to set up and run a simple TypeScript Web app that calls into a shared core.

This walk-through assumes you have already added the shared and shared_types libraries to your repo, as described in Shared core and types.

Create a Remix App

For this walk-through, we'll use the pnpm package manager